DataCite services

Locate, identify, and cite research data with DataCite, a global provider of DOIs for research data. https://www.datacite.org/

(fewer services than Crossref).

http://stephane-mottin.blogspot.fr/2017/01/datacite-inist-cern-metadata-schema.html

DOI handbook

http://www.doi.org/hb.html
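The DOI Handbook defines a DOI name as a prefix and a suffix separated by "/", where the prefix starts with the directory indicator "10.". Here is a minimal Python sketch of that rule, assuming you only need to split a DOI and build its https://doi.org/ resolver link. The function names are hypothetical, 10.5072/example-1 is a made-up DOI, and the code does not check every rule in the handbook.

```python
from __future__ import annotations

from urllib.parse import quote


def split_doi(doi: str) -> tuple[str, str]:
    """Split a DOI name into (prefix, suffix) at the first '/'."""
    # Strip a few common ways of writing a DOI (assumed input forms).
    for lead in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.lower().startswith(lead):
            doi = doi[len(lead):]
            break
    prefix, sep, suffix = doi.partition("/")
    # The prefix must start with the directory indicator "10."
    if not sep or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a DOI name: {doi!r}")
    return prefix, suffix


def resolver_url(doi: str) -> str:
    """Build the https://doi.org/ link, percent-encoding unsafe characters."""
    prefix, suffix = split_doi(doi)
    # quote() keeps '/' by default, so slashes inside the suffix survive.
    return f"https://doi.org/{prefix}/{quote(suffix)}"
```

For example, `resolver_url("doi:10.5072/example-1")` gives `https://doi.org/10.5072/example-1`.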

In order to create new DataCite DOIs and assign them to your content, it is necessary to become a DataCite member or work with one of the current members.

Through the web interface or the API of the DataCite Metadata Store, you can submit a DOI name, a metadata description following the DataCite Metadata Schema, and at least one URL of the object in order to create a DOI. Once created, information about a DOI is available through DataCite's various services (search, event data, OAI-PMH and others).
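As a rough illustration of the API route, the sketch below prepares (but does not send) the two HTTP requests described in the MDS API documentation as I understand it: first registering the DataCite XML metadata, then minting the DOI with the URL of the object. The test endpoint https://mds.test.datacite.org, the DOI 10.5072/example-1, the credentials and the function name `build_mds_requests` are assumptions for illustration; check the current MDS documentation before relying on these paths or headers.

```python
import base64
import urllib.request

# Assumed test endpoint; the production service is https://mds.datacite.org/
MDS_TEST = "https://mds.test.datacite.org"


def build_mds_requests(doi, landing_url, metadata_xml, user, password, base=MDS_TEST):
    """Hypothetical helper: return (metadata_request, doi_request); nothing is sent."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    auth = f"Basic {token}"  # MDS uses HTTP Basic authentication

    # 1) Register the metadata first (the DOI is read from the <identifier> element).
    metadata_req = urllib.request.Request(
        f"{base}/metadata",
        data=metadata_xml.encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/xml;charset=UTF-8", "Authorization": auth},
    )

    # 2) Mint the DOI by pointing it at the object's URL.
    body = f"doi={doi}\nurl={landing_url}"
    doi_req = urllib.request.Request(
        f"{base}/doi/{doi}",
        data=body.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain;charset=UTF-8", "Authorization": auth},
    )
    return metadata_req, doi_req
```

Each request could then be sent with `urllib.request.urlopen(req)`, once you have real MDS credentials from a DataCite member.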

The DataCite Metadata Store is a service for data publishers to mint DOIs and register associated metadata. The service requires organisations to first register for an account with a DataCite member.

https://mds.datacite.org/

http://schema.datacite.org/

Status of the services

http://status.datacite.org/

https://blog.datacite.org/

Services of DataCite profiles

DataCite Profiles integrates DataCite services from a user’s perspective and provides tools for personal use. In particular, it is a key piece of integration with ORCID, where researchers can connect their profiles and automatically update their ORCID record when any of their works contain a DOI. https://profiles.datacite.org/

Example

https://profiles.datacite.org/users/0000-0002-7088-4353

0000-0002-7088-4353 is my ORCID iD.
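The last character of an ORCID iD is a check character (ISO 7064 MOD 11-2, as documented by ORCID), so a typo in the iD can be caught before it is used in a profile URL. A small Python sketch; the function names are hypothetical and the two URL patterns are simply the ones shown in this post.

```python
import re


def orcid_check_char(base_digits: str) -> str:
    """ISO 7064 MOD 11-2 check character for the first 15 digits of an ORCID iD."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Check the 0000-0000-0000-000X layout and the final check character."""
    if not re.fullmatch(r"\d{4}-\d{4}-\d{4}-\d{3}[\dX]", orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_char(digits[:15]) == digits[15]


def profile_urls(orcid: str) -> dict:
    """Build the DataCite Profiles and Impactstory URLs used in this post."""
    if not is_valid_orcid(orcid):
        raise ValueError(f"invalid ORCID iD: {orcid}")
    return {
        "datacite": f"https://profiles.datacite.org/users/{orcid}",
        "impactstory": f"https://impactstory.org/u/{orcid}",
    }
```

For example, `is_valid_orcid("0000-0002-7088-4353")` returns `True`, while changing the last digit makes it return `False`.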

You can get:

- your ORCID Token

- your API Key

(if you want to use the ORCID API).

In your profile, you can select how to connect:

You can also follow ORCID claims.

For example: "You have 5 successful claims, 0 notification claims, 0 queued claims and 0 failed claims"

You are also linked to Impactstory.

Impactstory is an open-source website that helps researchers explore and share the online impact of their research.

https://impactstory.org/u/0000-0002-7088-4353

0000-0002-7088-4353 is my ORCID iD.

You must authorize impactstory.org to link with your ORCID record.

You must also connect impactstory.org with your Twitter account.

In Impactstory, Zenodo records imported from ORCID are listed as "datasets".

Zenodo and DataCite METADATA

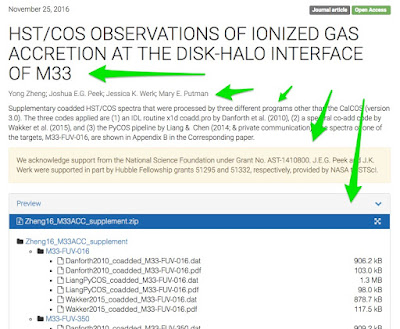

If you use Zenodo, an open archive that assigns DataCite DOIs, DataCite services are worth knowing about.

Zenodo assigns a DataCite DOI and offers an export of clean DataCite metadata (DataCite XML, schema version 3.1).

(see also my posts on this blog with the tag "zenodo")
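The exported DataCite XML is easy to read programmatically. Below is a minimal Python sketch that pulls out the DOI, title, year, description and creators. The sample record is made up, and the element names assume the DataCite kernel-3 layout; the code reads the namespace from the root element, so a newer export (kernel-4) should also work, but check it against your own export. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Made-up record in DataCite 3.1 (kernel-3) form, for illustration only.
SAMPLE = """<resource xmlns="http://datacite.org/schema/kernel-3">
  <identifier identifierType="DOI">10.5072/example-1</identifier>
  <creators>
    <creator><creatorName>Doe, Jane</creatorName></creator>
    <creator><creatorName>Roe, Richard</creatorName></creator>
  </creators>
  <titles><title>Example dataset</title></titles>
  <publisher>Zenodo</publisher>
  <publicationYear>2017</publicationYear>
  <descriptions>
    <description descriptionType="Abstract">A short abstract.</description>
  </descriptions>
</resource>"""


def summarize_datacite(xml_text: str) -> dict:
    """Extract a few core fields from a namespaced DataCite XML record."""
    root = ET.fromstring(xml_text)
    if not root.tag.startswith("{"):
        raise ValueError("expected a namespaced DataCite <resource> element")
    # Reuse whatever namespace the export declares (kernel-3, kernel-4, ...).
    ns = {"dc": root.tag[1:].split("}")[0]}

    def first_text(path):
        el = root.find(path, ns)
        return el.text.strip() if el is not None and el.text else None

    return {
        "doi": first_text("dc:identifier[@identifierType='DOI']"),
        "title": first_text("dc:titles/dc:title"),
        "year": first_text("dc:publicationYear"),
        "description": first_text("dc:descriptions/dc:description"),
        "creators": [
            el.text.strip()
            for el in root.findall("dc:creators/dc:creator/dc:creatorName", ns)
            if el.text
        ],
    }
```

In practice you would pass it the XML downloaded from the DataCite export of a Zenodo record instead of `SAMPLE`.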

Zenodo, DataCite and ORCID

If you use Zenodo together with your ORCID iD, you get some additional services:

You must allow Zenodo to "Get your ORCID iD".

You must allow DataCite to "Add works" to your ORCID record.

However, DataCite automatically sends only 5 metadata fields to ORCID:

- Title,

- Year,

- Description (the full field of Zenodo),

- Contributor (the field 'creator' of Zenodo = authors),

- DOI

You can add metadata yourself in ORCID, for example by changing the 'Work category' and 'Work type'.

The Source is then changed from 'zenodo' to 'Stéphane MOTTIN'.

In Impactstory, Zenodo links (imported from ORCID) are treated as "datasets" with only 4 metadata fields:

- Title,

- Year,

- Contributor (the field 'creator' of Zenodo = authors),

- DOI

ORCID

You can see the list of your ORCID "trusted organizations" at https://orcid.org/account.

In that list, DataCite can 'add works'.

For the other ORCID Search & Link wizards, see:

http://support.orcid.org/knowledgebase/articles/188278-link-works-to-your-orcid-record-from-another-syste

For example:

- The Crossref Metadata Search integration allows you to search and add works by title or DOI. Once you have authorized the connection and are logged into ORCID, Crossref search results will also include a button to add works to your ORCID record.

http://search.crossref.org/

- The DataCite integration allows you to find your research datasets, images, and other works. Recommended for locating works other than articles and works that can be found by DOI.

- The ISNI2ORCID integration allows you to link your ORCID and ISNI records and can be used to import books associated with your ISNI. Recommended for adding books.

http://isni2orcid.labs.orcid-eu.org/

- Use this tool to link your ResearcherID account and works from it to your ORCID record, and to send biographical and works information between ORCID and ResearcherID.

http://wokinfo.com/researcherid/integration/

- Use this wizard to import works associated with your Scopus Author ID; see Manage My [Scopus] Author Profile for more information. Recommended for adding multiple published articles to your ORCID record.

https://www.elsevier.com/solutions/scopus/support/authorprofile
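The Crossref and DataCite wizards above are interactive, but both organisations also offer public REST APIs that can be queried by title. The sketch below only builds the query URLs. The endpoints `api.crossref.org/works` and `api.datacite.org/dois` and the parameters `query.bibliographic`, `rows`, `query` and `page[size]` are assumptions to check against the current API documentation, and the function names are hypothetical.

```python
from urllib.parse import urlencode


def crossref_search_url(title: str, rows: int = 5) -> str:
    """Crossref REST API: search works by bibliographic text such as a title."""
    return "https://api.crossref.org/works?" + urlencode(
        {"query.bibliographic": title, "rows": rows}
    )


def datacite_search_url(text: str, size: int = 5) -> str:
    """DataCite REST API: search DOIs (datasets, images, software, ...)."""
    return "https://api.datacite.org/dois?" + urlencode(
        {"query": text, "page[size]": size}
    )
```

Fetching either URL (for example with `urllib.request.urlopen`) returns JSON. Writing the selected works into your ORCID record still goes through the wizards, because that step needs your ORCID authorization.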